If you think we are exaggerating with that headline, to quote Otis Redding: ‘I’m going to prove every word I say.’

BREAKING: SCOTUS just ruled in favor of Twitter in Twitter v Taamneh. Gonzalez vacated. Clean sweep. No mention of #Section230. More details to come.

— Jess Miers 🦝 (@jess_miers) May 18, 2023

Taamneh – plaintiffs failed to state a claim under the ATA https://t.co/zoSVy3g7wP

— nicole saad bembridge (@nsaadbembridge) May 18, 2023

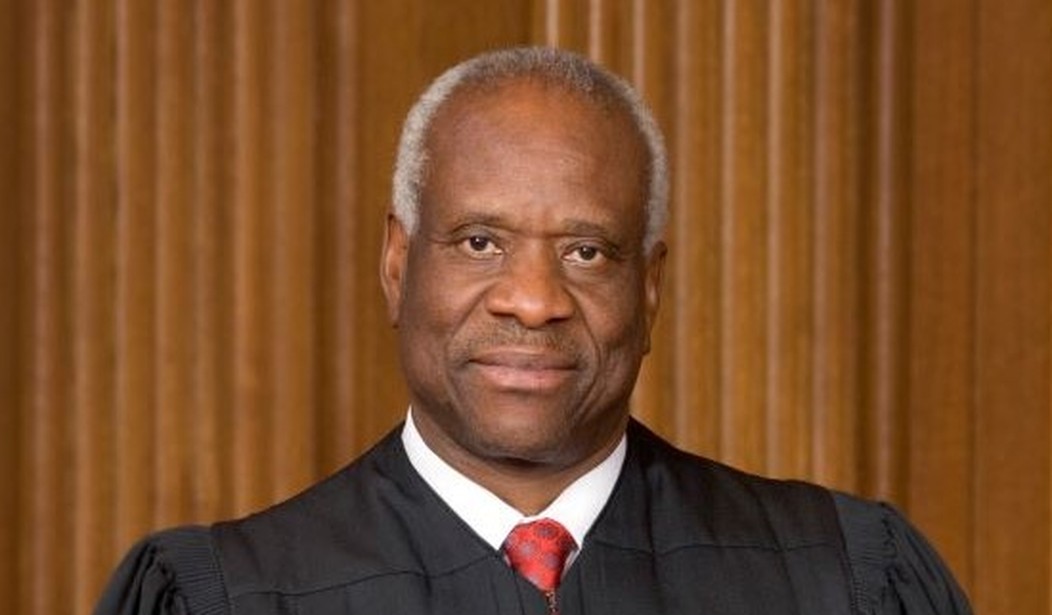

We are talking about Twitter, Inc. v. Taamneh, et al. (2023), a case decided yesterday. The decision was unanimous, with Justice Clarence Thomas writing the opinion of the Court. We already told you about the ‘cat fight’ that broke out over Prince and Andy Warhol, but this case is far more important to public discourse.

Let’s run down the facts for a moment. ISIS carried out an attack on the Reina nightclub in Istanbul, Turkey, killing 39 people. The family of one of the victims then sued Twitter, Facebook and Google (as owner of YouTube) for allegedly aiding and abetting ISIS. Under federal law, anyone who aids and abets an act of international terrorism can be sued for that terrorism, more or less.

Also, if you read the case on your own, bear in mind that for procedural reasons, at this stage of the case, the Court has to assume that every fact alleged by the plaintiffs is true, and there are only a few narrow exceptions to this rule. So even if Twitter, etc. can prove that some of these allegations are lies, the courts are generally going to pretend they are true at this stage. Challenges to factual claims come later. Naturally, the Court knows that these allegations might not be the actual truth, and you should bear that in mind, too.

So allegedly these social media platforms aided and abetted the attack this way:

As plaintiffs allege, ISIS and its adherents have used these platforms for years as tools for recruiting, fundraising, and spreading their propaganda. Like many others around the world, ISIS and its supporters opened accounts on Facebook, YouTube, and Twitter and uploaded videos and messages for others to see. Like most other content on those platforms, ISIS’ videos and messages were then matched with other users based on those users’ information and use history. And, like most other content, advertisements were displayed with ISIS’ messages, posts, and videos based on information about the viewer and the content being viewed. Unlike most other content, however, ISIS’ videos and messages celebrated terrorism and recruited new terrorists. For example, ISIS uploaded videos that fundraised for weapons of terror and that showed brutal executions of soldiers and civilians alike. And plaintiffs allege that these platforms have been crucial to ISIS’ growth, allowing it to reach new audiences, gain new members, and spread its message of terror.

They also allege that these companies failed to moderate these ISIS accounts, and then they make some special allegations related to Google. Those are interesting, and we will talk about them later.

But returning to just the allegations against Twitter and Facebook, let’s stop and think about the implications of this. Right now we are talking about terrorism, but a person’s speech can create liability in many different ways, and aiding-and-abetting liability can be applied to most, if not all, of them. Take the simple case of defamation. As the Court says:

It appears that for every minute of the day, approximately 500 hours of video are uploaded to YouTube, 510,000 comments are posted on Facebook, and 347,000 tweets are sent on Twitter.

There is simply no way that those platforms could monitor every user’s content to make sure no one is saying anything defamatory. For instance, suppose, hypothetically, that John Jones said on Twitter that ‘Jane Smith is a drunk and an adulterer.’ Assuming that Jane Smith is not a famous person, how would Twitter even know whether or not it is true? Yet if merely letting a person tweet is enough to make Twitter an aider and abettor, then Twitter would have no choice but to either 1) investigate every factual allegation made by any Twitter user before the tweet is sent out, or 2) refuse to let ordinary people send out any potentially defamatory tweet. But what social media platform could afford to take option #1?

Nor would it be limited to Twitter, Facebook and YouTube. Every blogging platform would face the same choice. So would every comment section. Ditto for Amazon product reviews. It is not an exaggeration to say that if the Supreme Court had held that Twitter, etc. were liable, pretty much all user-generated content would have shut down. So Elie Mystal (who is a man) is not exaggerating very much in the first sentence of this tweet:

The Supreme Court dropped its big Section 230 cases today and it unanimously decided to let the internet live.

But I'm really digging Justice Jackson's 2 paragraph concurrence which I'll summarize as her saying: "Hey, Elon, we're still watching. Don't act a fool."— Elie Mystal (@ElieNYC) May 18, 2023

We disagree with him a lot, and we make fun of him a lot. But his comedic exaggeration is not much of an exaggeration. Yes, large companies like the Washington Post or ABC News would continue to post on the Internet as before, because they are already geared up to defend themselves from liability based on what their reporters say. But regular folks, the so-called ‘little people,’ would pretty much be silenced, because no one would be willing to take on the financial risk of letting regular people speak their minds. So, yes, you would see less negative behavior, like people jumping to apparently racist conclusions about pregnant healthcare workers, but you would also lose positive conduct, like when lowly bloggers proved that Dan Rather was peddling fake memos.

Didn’t we promise we would vindicate the headline? There’s your proof that Twitter was saved, and the only arguable exaggeration is that it wasn’t really Thomas alone, since the decision was unanimous.

Of course, one solution to this problem is also controversial: Section 230.

This tweet links to the statutory text:

Really? Can you show me in the text of section 230 where it backs up what you say?

Need a link? https://t.co/y79iwKa941 https://t.co/mYUfMwapZm

— Section 230 (@Section_230) May 19, 2023

For the most part, the gist of the text of Section 230 is that if you go on the Internet and commit a crime or a tort, only you are responsible for it. (A ‘tort’ is a civil wrong, as opposed to a criminal wrong.) Not the social media platform, not the Washington Post for letting you comment on an article, and not Twitchy for letting you comment on our posts.

(Of course, none of this should be taken as legal advice.)

That’s not too controversial. The controversial part is that it also says that if these forums moderate their content, that doesn’t change the fact that if you commit a crime or a tort, only you are responsible for it. That has the effect of freeing these entities to moderate their comments in ways that the government couldn’t under the First Amendment, without risking liability for any criminal or tortious expression.

And to be fair, it does create an incongruity. When Facebook is sued because it allowed someone to defame another person on its platform, it says to the court, ‘We’re not responsible for whatever this person said on Facebook. We didn’t say it.’ But then if a state attempts to limit its ability to moderate, Facebook turns around and says, ‘You can’t tell us we have to let a person onto our platform. That would violate our First Amendment rights, because allowing a person on our platform or refusing to is expressive activity!’ Section 230 lets them kind of talk out of both sides of their mouth.

The essential problem here is that, for liability purposes, these platforms want to be treated no differently than the paper and ink companies in tort cases. If Mary buys ink and paper at Staples and then prints flyers that defame someone, Staples isn’t liable, and neither are the people who manufactured the ink and paper. But then again, those companies don’t try to tell you what you can and can’t say with that ink and paper, partly because they have no practical way to monitor you. The Internet, however, can largely be monitored, and there is a very real risk that at some point no one will be able to say anything on the Internet at all unless it is approved by various ‘owners.’ That might not technically violate the First Amendment, but it might completely frustrate the goal the Founders had in writing it: to ensure that the people can have a robust debate on virtually any topic. As we have said before, this ability to debate is absolutely crucial to having a true republic.

But in Taamneh, Section 230 wasn’t even mentioned. Instead, the Supreme Court said that all Twitter and Facebook were doing was setting up a service that literally anyone could use, for good or ill. We wouldn’t hold Starbucks liable if a person bought a coffee and then threw it in another person’s face (which would be a battery). To establish aiding and abetting, the plaintiffs needed to allege more:

None of those allegations suggest that defendants culpably ‘associate[d themselves] with’ the Reina attack, ‘participate[d] in it as something that [they] wishe[d] to bring about,’ or sought ‘by [their] action to make it succeed.’ In part, that is because the only affirmative ‘conduct’ defendants allegedly undertook was creating their platforms and setting up their algorithms to display content relevant to user inputs and user history.

So the Supreme Court didn’t directly address Section 230 at all, a fact noted by many:

Supreme Court shields Twitter from liability for terror-related content and leaves Section 230 untouched https://t.co/hjKPRd9PGF

— David Arnold (@David_M_Arnold) May 19, 2023

“Supreme Court’s Social Media Ruling Is a Temporary Reprieve; Google and Twitter have prevailed for now, but there are plenty of Section 230 skeptics at the high court — and in Congress”: Law professor Stephen L. Carter has this essay online at Bloomberg https://t.co/Vf4ozB3lnY

— Howard Bashman (@howappealing) May 19, 2023

In a win for big tech, a ruling by the Supreme Court Thursday sided with social media companies Twitter, Google and Facebook, shielding them from being held liable for aiding and abetting terrorism through content posted to their platforms. https://t.co/m3aKxbkCco

— Iowa's News Now (@iowasnewsnow) May 19, 2023

Supreme Court ruling continues to protect Google, Facebook and Twitter from what users post https://t.co/FvyOZj5sSj

— Stephen Johnson (@Stephen12432627) May 19, 2023

That’s kind of a bad way to put it, but you can probably understand what he is trying to say.

OMG @elonmusk CONTROLS THE SUPREME COURT!!! – @mehdirhasan, probably https://t.co/rBRNMFvKRE

— GayPatriot (@GayPatriot) May 18, 2023

Heh. Speaking of moderation, we are so glad he’s back.

Supreme Court sides with social media companies, keeps Section 230 in place https://t.co/nbLivVOpfN

— Jack Poso 🇺🇸 (@JackPosobiec) May 18, 2023

At least on the surface, it is more accurate to say they ignored it.

Supreme Court rules against reexamining Section 230 https://t.co/nDk05e1Yhb

— Farhan Rasheed (@Farhann8) May 18, 2023

That is not a good take. The better take is that they didn’t look at any issues related to Section 230 because they were ruling that Twitter, etc., wouldn’t normally be liable, anyway. Section 230 is best understood as an exception to any liability rules that might purport to hold those companies liable. But they don’t need an exception to the rule unless the rule works against them in the first place.

Another smackdown of the 9th Circuit.

"Supreme Court Rules in Favor of Twitter, Google, Facebook in Liability Case Over User-Posted Content" https://t.co/nUVoyxgWvn

— Elaine 🇺🇸 (@ElaineSoCalGov) May 19, 2023

Yes, the fact that the Ninth Circuit is reversed by the Supreme Court at a very high rate has been a running joke for decades, at least among nerdy law types.

So, in our opinion, Twitter and Facebook are probably out of the woods. But Google might have more trouble. When we were discussing the facts, we told you we were leaving something out and would come back to it. This is what we were leaving out:

Plaintiffs also provide a set of allegations specific to Google. According to plaintiffs, Google has established a system that shares revenue gained from certain advertisements on YouTube with users who posted the videos watched with the advertisement. As part of that system, Google allegedly reviews and approves certain videos before Google permits ads to accompany that video. Plaintiffs allege that Google has reviewed and approved at least some ISIS videos under that system, thereby sharing some amount of revenue with ISIS.

And yes, dear reader, if that suggests that Google might be in trouble, you’re not wrong. Now, Google won in the Supreme Court, but look at why it won:

The complaint here alleges nothing about the amount of money that Google supposedly shared with ISIS, the number of accounts approved for revenue sharing, or the content of the videos that were approved. It thus could be the case that Google approved only one ISIS-related video and shared only $50 with someone affiliated with ISIS; the complaint simply does not say, nor does it give any other reason to view Google’s revenue sharing as substantial assistance. Without more, plaintiffs thus have not plausibly alleged that Google knowingly provided substantial assistance to the Reina attack, let alone (as their theory of liability requires) every single terrorist act committed by ISIS.

So, as things stand, the plaintiffs didn’t allege enough details in their complaint to hold Google liable. But complaints can be amended, and when discussing a companion case…

Great news for the future of the internet: the Supreme Court gets about as hands off as it can possibly get on Section 230 in a 2.5 page per curium decision in Gonzalez v. Google.

Opinion here: https://t.co/h2rNGTp0mE

— Kate Klonick (@Klonick) May 18, 2023

…dealing with pretty much the same kinds of issues, the Supreme Court says specifically that it is not deciding whether or not the plaintiffs will be allowed to amend their complaint. But as a practical matter, plaintiffs are almost always allowed to do so, and who knows what they might allege if they do. So Google really might not be out of the woods yet.

And to circle back a bit, let’s talk about the second sentence in this tweet from Mad Scientist Fat Albert:

The Supreme Court dropped its big Section 230 cases today and it unanimously decided to let the internet live.

But I'm really digging Justice Jackson's 2 paragraph concurrence which I'll summarize as her saying: "Hey, Elon, we're still watching. Don't act a fool."— Elie Mystal (@ElieNYC) May 18, 2023

A concurrence is an opinion by a justice who agrees with at least the result reached by the majority but wants to say something extra. Sometimes that something extra is to indicate that the justice agrees only with the majority’s bottom line but reaches that conclusion by a different route, and sometimes the justice just wants to clarify how he or she personally sees the law.

Mr. Mystal thinks Justice Jackson is throwing shade at Elon Musk, but we really don’t see it. In fact, when the first paragraph says ‘Other cases presenting different allegations and different records may lead to different conclusions,’ she is more likely to be talking about YouTube and the monetization issue than Twitter. Or she might be talking about completely different platforms, like Blogspot. We see no reason to think it is about Musk’s Twitter 2.0.

And the second paragraph just talks about how the general principles of aiding-and-abetting liability in tort law might not apply in the same way in other contexts. We think she is more likely talking about gun manufacturers than Elon Musk. Mystal seems to be suffering from monomania.

And as a final note, that Otis Redding quote? It’s from ‘Hard to Handle,’ a song you are more likely to remember from The Black Crowes than from Mr. Redding:

Otis Redding – Hard To Handle https://t.co/fmKKAUrsL9 pic.twitter.com/7lufCQfewl

— A-Fujimoto (@dfv1nj9qfBdImTT) September 20, 2022

Nothing wrong with jamming to a classic.

***

Editor’s Note: Do you enjoy Twitchy’s conservative reporting taking on the radical left and woke media? Support our work so that we can continue to bring you the truth. Join Twitchy VIP and use the promo code SAVEAMERICA to get 40% off your VIP membership!
